Text2Scene

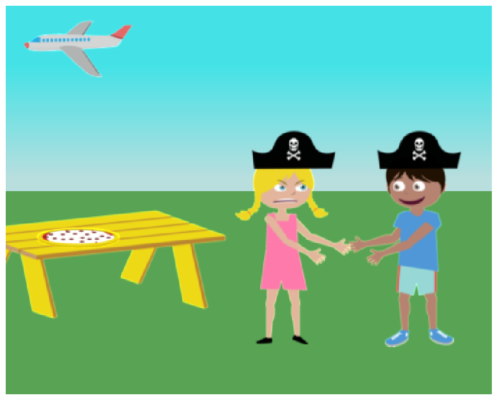

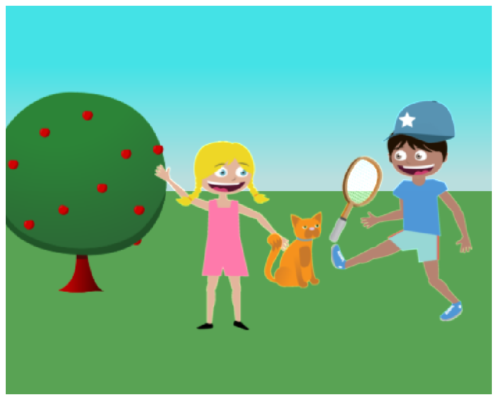

Text2Scene was proposed by our group in the CVPR 2019 paper "Text2Scene: Generating Compositional Scenes from Textual Descriptions". The model takes a textual description of a scene as input and generates the scene graphically, object by object, using a recurrent neural network, highlighting the ability of such networks to learn complex and seemingly non-sequential tasks. The more advanced version of our model requires more computation but can also produce real images by stitching together segments from other images. Read more about Text2Scene in the research blogs of IBM and NVIDIA, and download the full source code from https://github.com/uvavision/Text2Scene. This demo generates cartoon-like images using the vocabulary and graphics from the Abstract Scenes dataset proposed by Zitnick and Parikh in 2013.

The Text2Scene model was proposed by our group in a CVPR 2019 paper titled "Text2Scene: Generating Compositional Scenes from Textual Descriptions". This model takes pieces of images from the COCO dataset and stitches them together into a new image. This demo generates cartoon-like images using the vocabulary and graphics from the Abstract Scenes dataset proposed by Zitnick and Parikh.